Model Serving for Any LLM

Low-latency. High-throughput. Cloud-agnostic. Declaratively ship LLM applications with built-in serving and integration APIs, ready for enterprise scale across any cloud, VPC, on-prem, or edge.

As seen on

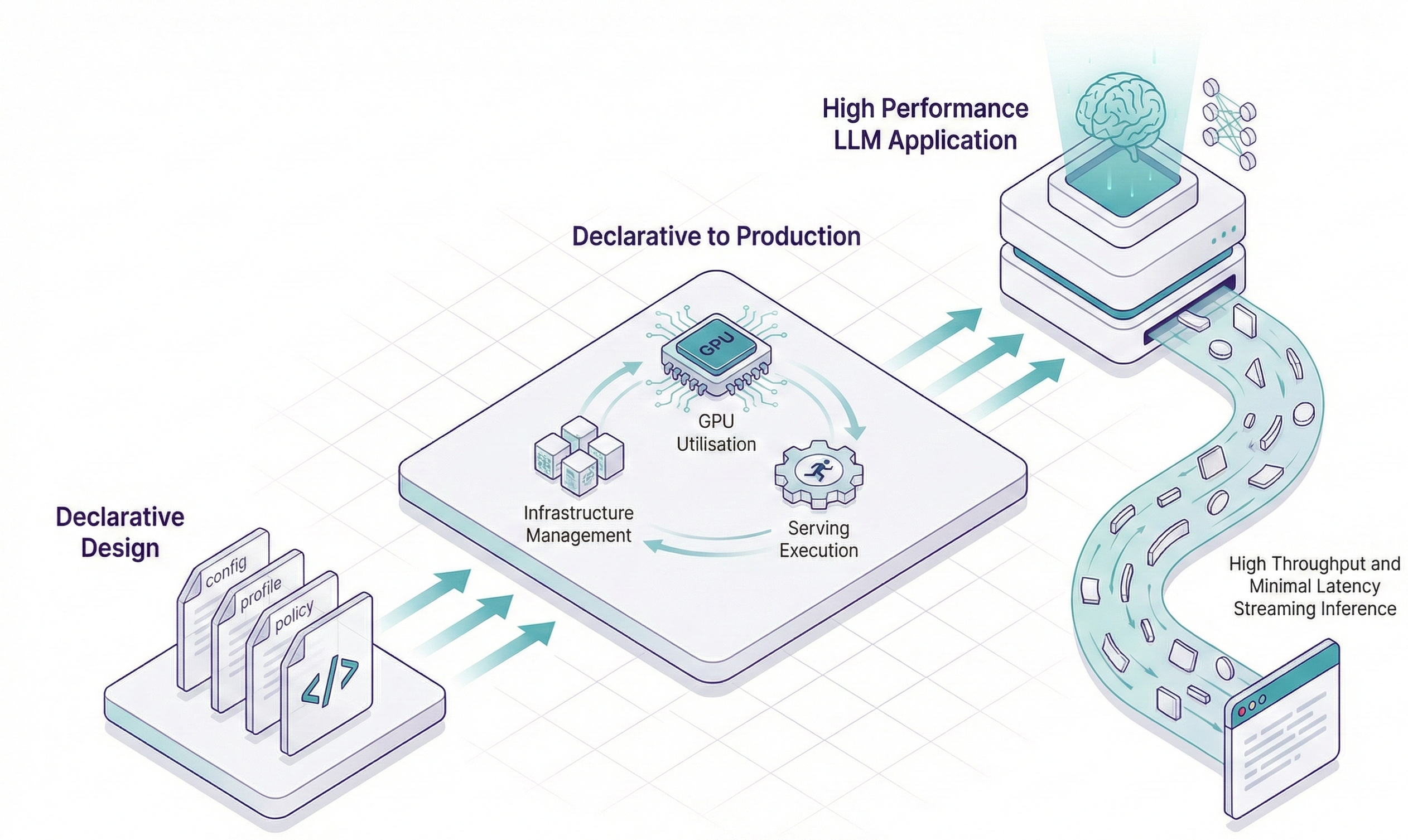

From declarative design to production speed

INTELLITHING translates declarative configurations into fully provisioned, application-ready deployments. We handle low-level infrastructure, GPU utilisation and serving execution so models run with minimal latency and maximum throughput. Even open-source models deliver an enterprise-quality streaming experience.
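To make the declarative idea concrete, here is a minimal sketch of how a deployment could be described as data and expanded into provisioning steps. It is illustrative only: `DeploymentSpec`, its fields, `plan`, and the model name `example-7b` are hypothetical and do not represent INTELLITHING's actual configuration format or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentSpec:
    """Hypothetical declarative description of a model deployment."""
    name: str
    model: str             # model identifier (placeholder value below)
    accelerator: str       # "cpu" or "gpu"
    min_replicas: int = 0  # 0 permits scale to zero
    max_replicas: int = 1
    streaming: bool = True


def plan(spec: DeploymentSpec) -> list[str]:
    """Expand a spec into the ordered steps a platform might carry out."""
    if spec.accelerator not in ("cpu", "gpu"):
        raise ValueError(f"unknown accelerator: {spec.accelerator!r}")
    if not 0 <= spec.min_replicas <= spec.max_replicas:
        raise ValueError("need 0 <= min_replicas <= max_replicas")
    steps = [
        f"allocate {spec.accelerator} node pool for {spec.name}",
        f"load model {spec.model}",
        f"configure autoscaling {spec.min_replicas}..{spec.max_replicas}",
    ]
    if spec.streaming:
        steps.append("expose streaming inference endpoint")
    return steps


# The user states *what* they want; the plan is derived from it.
spec = DeploymentSpec(name="support-bot", model="example-7b",
                      accelerator="gpu", max_replicas=4)
for step in plan(spec):
    print(step)
```

The point of the sketch is the separation of concerns: the spec holds intent only, and everything operational is derived from it rather than scripted by hand.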

Out-of-the-box APIs

Every INTELLITHING deployment is delivered with an enterprise-ready API surface from day one. This includes low-latency, high-throughput streaming inference endpoints for both full applications and standalone model services, so your teams can integrate immediately without building bespoke serving layers. Alongside inference, deployments expose built-in trigger activation and session management APIs, enabling secure automation or production-grade chat experiences at scale. The result is a complete, consumable product interface shipped automatically with every deployment, not an additional engineering project.
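As a rough illustration of what consuming a streaming inference endpoint can look like on the client side, the sketch below reassembles a response from a stream of lines. The wire format is an assumption made for the example: server-sent-events style `data:` lines, a JSON payload with a `token` field, and a `[DONE]` sentinel are all hypothetical and not a documented INTELLITHING format.

```python
import json
from typing import Iterable, Iterator


def iter_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield text tokens from SSE-style lines (assumed framing, see lead-in)."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and non-data fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":  # assumed end-of-stream sentinel
            return
        yield json.loads(payload)["token"]  # assumed payload shape


# Canned lines stand in for a live HTTP response body.
sample = [
    'data: {"token": "Hello"}',
    "",
    'data: {"token": ", world"}',
    "data: [DONE]",
]
print("".join(iter_tokens(sample)))  # prints "Hello, world"
```

Because the function accepts any iterable of lines, the same code can be pointed at a real HTTP response stream once the actual endpoint and framing are known.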

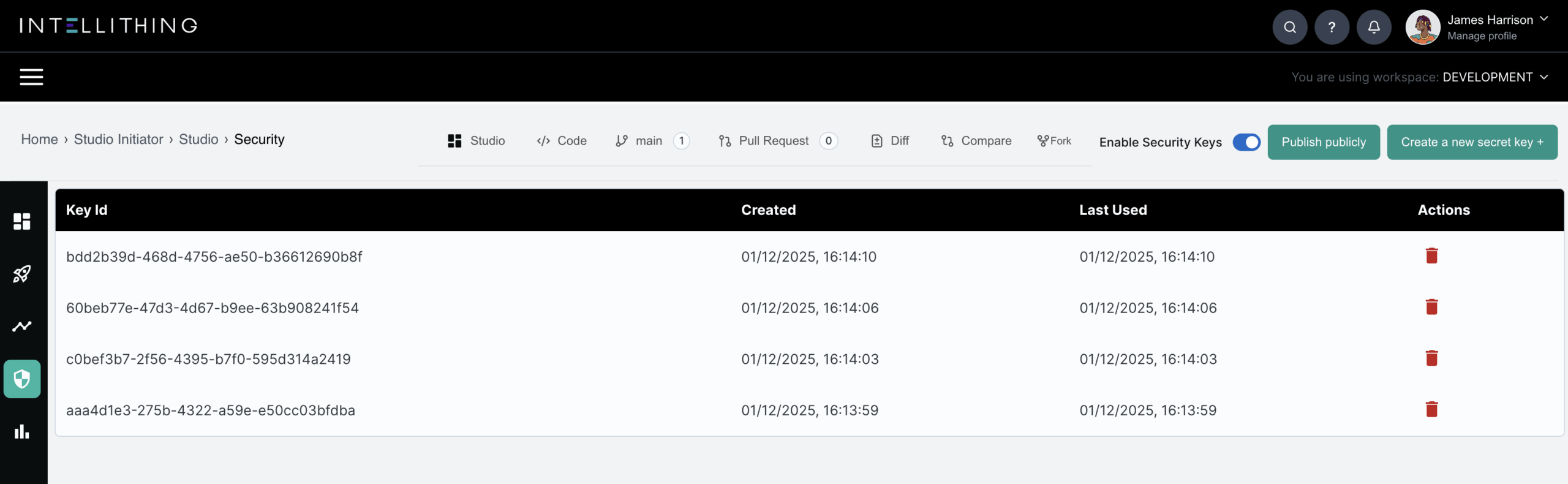

Enterprise API Key Provisioning

INTELLITHING automatically provisions enterprise-grade API credentials for every application you deploy, including inference endpoints. Each deployment is secured with a unique public and private key pair, enabling signed, verifiable requests and robust service-to-service trust without custom security engineering.

Keys are generated, stored and governed within your VPC, giving teams a secure default for authentication, easy rotation, and clear auditability for every external or internal API call.

Declarative Deployment

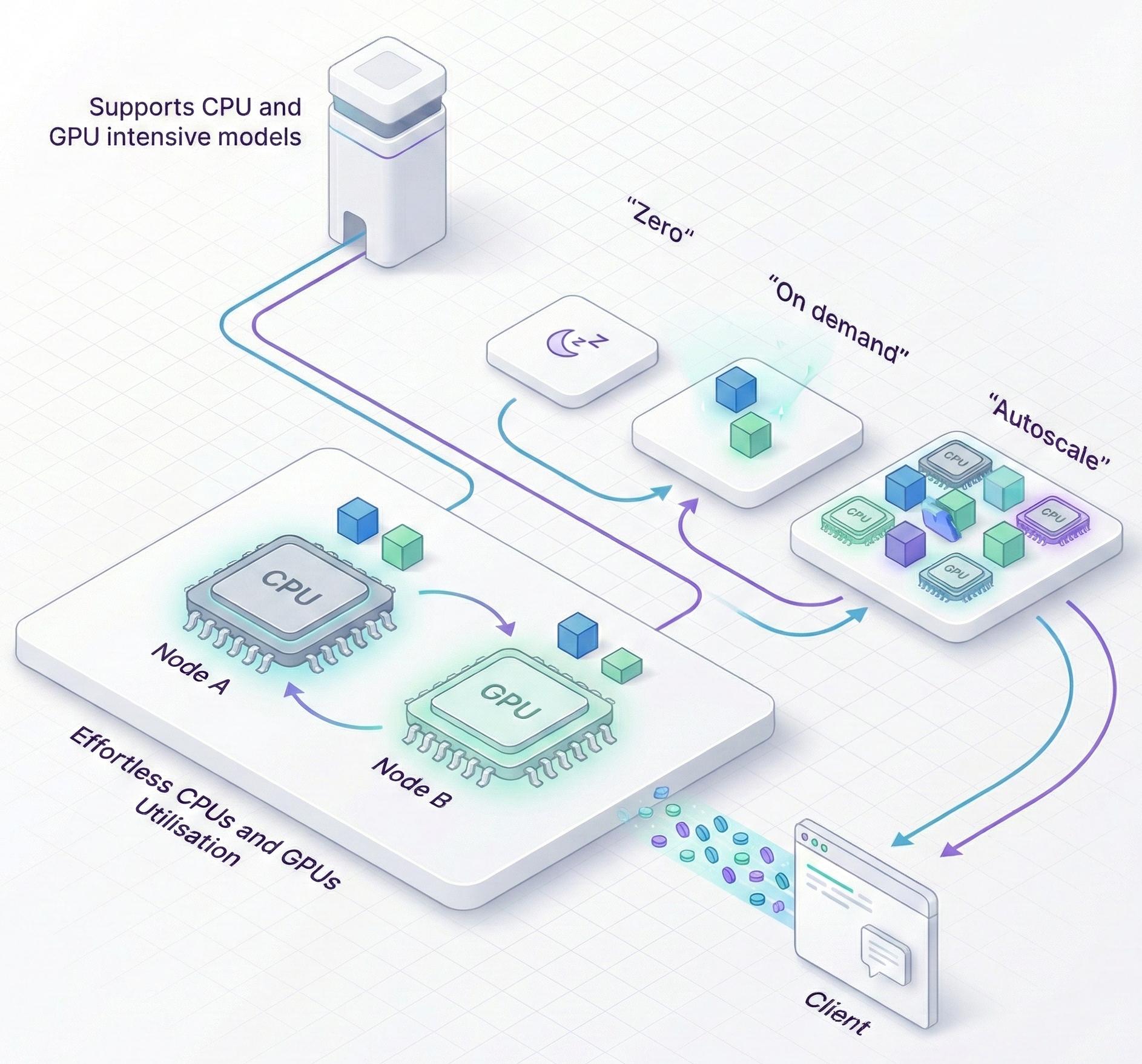

Effortless CPU/GPU Utilisation

Supports both CPU- and GPU-intensive models:

Run everything from lightweight prediction services to large-scale LLM and deep learning workloads, with the right compute automatically allocated for each deployment.

Scale to zero or autoscale on demand:

Eliminate idle cost when traffic is low and scale instantly under load, ensuring consistent throughput and low latency without manual intervention.
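The scaling behaviour described above can be sketched as a simple replica calculation. This is a generic illustration of concurrency-based autoscaling with scale to zero, not INTELLITHING's actual algorithm; the function name and the parameters `target_per_replica` and `max_replicas` are assumptions introduced for the example.

```python
import math


def desired_replicas(in_flight: int, target_per_replica: int, max_replicas: int) -> int:
    """Replicas needed so each one serves at most target_per_replica requests."""
    if target_per_replica <= 0 or max_replicas < 1:
        raise ValueError("target_per_replica and max_replicas must be positive")
    if in_flight <= 0:
        return 0  # no traffic: scale to zero and stop paying for idle compute
    # Round up so a partially loaded replica still gets provisioned,
    # then cap at the configured ceiling.
    return min(max_replicas, math.ceil(in_flight / target_per_replica))


for load in (0, 1, 25, 500):
    print(load, "->", desired_replicas(load, target_per_replica=8, max_replicas=10))
```

With a target of 8 concurrent requests per replica and a ceiling of 10, the loads 0, 1, 25 and 500 map to 0, 1, 4 and 10 replicas respectively. A production autoscaler would typically also smooth the signal over a time window to avoid flapping.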

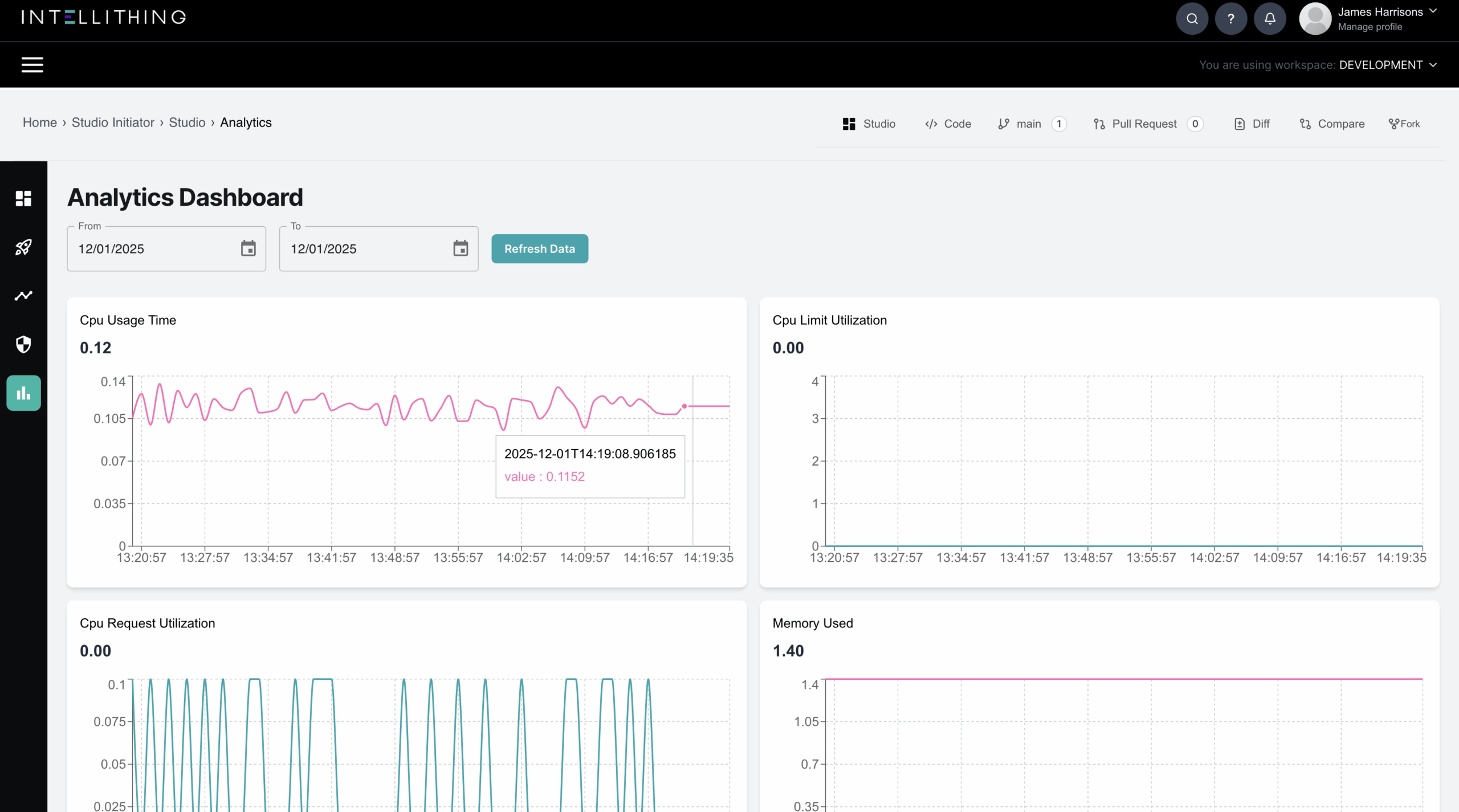

Full Observability & Monitoring

Monitoring and observability in INTELLITHING are designed for operational confidence at enterprise scale. The Analytics panel provides a live, unified view of every deployment, showing real-time performance and usage signals as applications run in production. Teams can immediately see how a service is behaving under load and how resources are being consumed.

Secure by Design

Data never leaves your environment. Identity and access are enforced at every step.

Enterprise Ready

INTELLITHING is designed so data never leaves your environment. The control and compute planes run entirely in your VPC, with fine-grained identity and access management, audit trails, and secure routing. We’ve embedded the principles of SOC 2 and ISO 27001 into our architecture from the start, ensuring you’re compliance-ready today and certification-ready tomorrow.

Experience INTELLITHING in action.

Request a live demo or see how the Operating Layer transforms enterprise AI.